Join Lance Dacy and Brian Milner as they discuss the use of metrics in an Agile environment to ensure optimal performance without taking things in the wrong direction.

Overview

In this episode of the Agile Mentors podcast, Lance Dacy joins Brian to dig into how to use metrics in software development to improve performance without incentivizing the wrong behaviors.

Listen in as he walks us through the three tiers of metrics that are crucial for Agile teams to consider in order to stay on course.

He’ll share the tools required to gain a holistic understanding of an individual's performance and how leadership styles and stakeholders influence team-level metrics.

Plus, a look at the common challenges that teams may encounter during their Agile adoption journey and how to overcome them.

Listen now to discover:

[01:18] - Lance Dacy is on the show to discuss metrics.

[02:09] - Brian asks, are there ‘good’ ways to track performance?

[02:32] - Lance shares why Agile doesn’t really lend itself to tracking performance.

[03:57] - How to handle performance reviews.

[04:32] - Lance shares the best way to measure individual performance.

[06:40] - Measuring team contribution vs. standalone rockstar.

[07:48] - What Ken Schwaber and Jeff Sutherland say about the completeness of the Scrum Framework and why having a superhero on your team is bad.

[09:45] - Lance shares the 3 tiers of metrics Agile teams should measure to make sure they are heading in the right direction.

[11:09] - Using tangible business-level metrics such as time to market for products, NPS, and support call volume to evaluate performance.

[12:20] - How metrics such as the number of work items completed per month and cycle time can be used to evaluate performance at the product level in an Agile environment.

[14:10] - Lance shares standard metrics such as velocity, backlog churn, and work-in-process that can be used to evaluate things at the team level.

[14:45] - Brian shares the importance of having a broader perspective to avoid having a distorted view of performance.

[16:53] - How using tools such as Ishikawa (fishbone) diagrams can help you identify the root cause of the problem instead of the apparent cause.

[17:22] - Individual velocity and other big metrics to avoid.

[19:02] - How the balanced scorecard can help managers use ALL the information available to develop a comprehensive understanding of an individual's performance.

[19:25] - The detrimental effects of using the wrong metrics to evaluate an individual's contribution.

[21:29] - Brian shares the story of how a manager's bug-squashing effort ended up incentivizing the wrong behavior.

[22:31] - Lance references Stephen Denning's statement and reminds us that assumption testing is what developers do every day.

[24:00] - Referencing the State of Agile Report statistics on what's stalling your transformation to Agile.

[25:15] - Lance shares a behind-the-scenes look at how team-level metrics are affected by leadership styles and stakeholders.

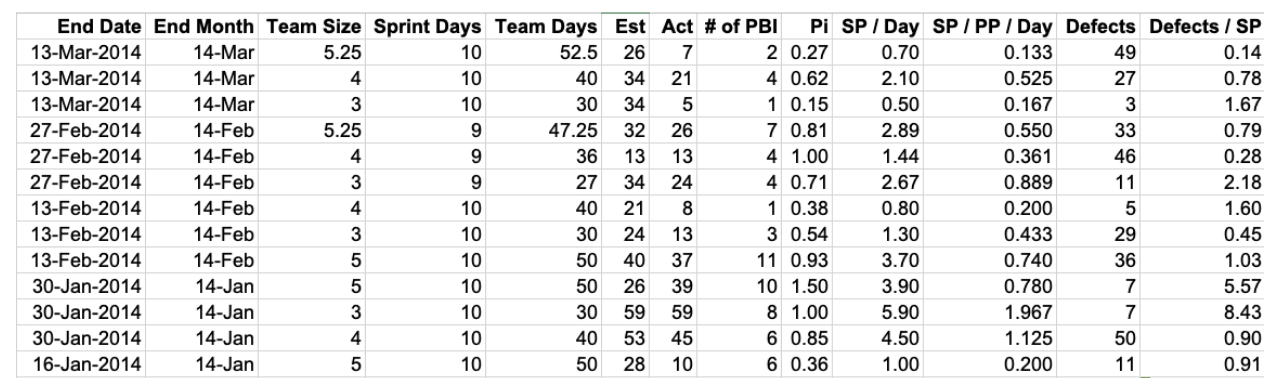

[27:05] - Lance shares the spreadsheet he's been using to track data for a Scrum team for over 5 years to understand why the team is not predictable and what they can do to improve.

[31:38] - Got metrics management questions? Reach out to Lance.

[31:46] - Why it’s imperative to think of software development as R&D rather than manufacturing in order to choose the right metrics.

[33:26] - Continue the conversation in The Agile Mentors Community.

References and resources mentioned in the show:

- Join the More than 24k People Who've Trained to Succeed With Mountain Goat Software

- Mountain Goat Software Certified Scrum and Agile Training Schedule

- #30: How to Get the Best Out of the New Year with Lance Dacy

- #31: Starting Strong: Tips for Successfully Starting with a New Organization with Julie Chickering

- State of Agile Report

- HBR's Embrace Of Agile

- The Agile Mentors Community

Additional metrics resources mentioned by Lance

Agile Metrics

- Three tiers: business outcomes, product group metrics, and unit metrics

- KPI/OKR (Business Outcomes)

- Time to market, NPS, Support Call Volume, Revenue, Active Accounts, New Customer Onboarding Time, Regulatory Violations

- Product Group Metrics

- Work items completed per unit of time (quarterly)

- % of work in active state vs. wait state

- Cycle time of work items (idea to done)

- Predictability (% of work items that reach ready when planned)
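The product group metrics above can be computed from work-item history. Below is a minimal Python sketch, assuming each work item records when the idea was logged, when it reached done, and how many days it spent being actively worked versus waiting. The `WorkItem` record, its field names, and the sample dates are hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class WorkItem:
    # Hypothetical record; these field names are illustrative, not from any tool.
    created: date        # when the idea entered the backlog
    done: date           # when the item met the definition of done
    active_days: float   # days someone was actively working on it
    wait_days: float     # days it sat in a queue or was blocked


def cycle_time_days(item: WorkItem) -> int:
    """Calendar days from idea to done."""
    return (item.done - item.created).days


def percent_active(items: list[WorkItem]) -> float:
    """Share of tracked time spent in an active state rather than a wait state."""
    active = sum(i.active_days for i in items)
    total = active + sum(i.wait_days for i in items)
    return 100.0 * active / total if total else 0.0


def throughput(items: list[WorkItem], start: date, end: date) -> int:
    """Work items that reached done between start and end, inclusive."""
    return sum(start <= i.done <= end for i in items)


items = [
    WorkItem(date(2024, 1, 2), date(2024, 1, 16), active_days=4, wait_days=10),
    WorkItem(date(2024, 1, 5), date(2024, 1, 12), active_days=3, wait_days=4),
]
print(throughput(items, date(2024, 1, 1), date(2024, 3, 31)))  # items done in Q1
print(mean(cycle_time_days(i) for i in items))                 # average cycle time
print(round(percent_active(items), 1))                         # % active vs. wait
```

A low active percentage usually points at queues and hand-offs rather than at how hard people are working, which is why it sits at the product level rather than the individual level.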

- Unit metrics

- Velocity, backlog churn, work in process, team stability

Metrics Spreadsheet

- Team Size

- Tracking the size of our cross-functional team (typically Dev and QA) allows us to pair that number with velocity to play “what-if” scenarios in the future. Whether you count a shared person as half or as whole, what matters is keeping it consistent throughout your tracking. Most teams simply count the number of developers and testers.

- Team Days

- Tracking the iteration length also helps in understanding a team’s performance. If the team has a 2-week sprint, that is usually 9 development days of actual work; the 10th day is set aside for sprint review, retrospective, and planning.

- Committed

- Tracking what the team committed to completing within a sprint is crucial to understanding their predictability. The team knows the least at the beginning of the sprint, and tracking what they think they can complete helps us with long-term planning.

- Completed

- Tracking what the team completed is really just tracking velocity (listed above under unit metrics), but comparing it to what they committed helps us understand their predictability index.

- Predictability Index (Pi)

- Software development is complex, risky, and uncertain. A skill that is sought after in this type of environment is predictability. The better we understand what we can accomplish, the more reliably we finish what we said we would, and that builds trust with our management team and customers. If we aren’t very good at it yet, tracking this metric helps us get better by committing to less or more depending on our index.

- Example:

- Completed Items / Committed Items = Predictability Index (Pi)

- 25 Story Points / 20 Story Points = 125%

- 20 Story Points / 25 Story Points = 80%

- A high Pi does not mean a team is good at predictability. Don’t let high or low numbers fool you; focus on the variance from 100% instead of the actual number. An arbitrary target is ±15%, that is, a Pi between 85% and 115%.
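A minimal Python sketch of the predictability index, using the two examples above plus one made-up sprint, and the suggested ±15% band as a default. The function names and the `tolerance` parameter are illustrative, not part of any tool.

```python
def predictability_index(completed: float, committed: float) -> float:
    """Pi = completed / committed, expressed as a percentage."""
    if committed <= 0:
        raise ValueError("committed must be positive")
    return 100.0 * completed / committed


def within_target(pi: float, tolerance: float = 15.0) -> bool:
    """True when Pi is within +/- tolerance percentage points of 100%."""
    return abs(pi - 100.0) <= tolerance


# (completed, committed) story points; the third pair is hypothetical.
for completed, committed in [(25, 20), (20, 25), (22, 20)]:
    pi = predictability_index(completed, committed)
    print(f"{completed}/{committed}: Pi = {pi:.0f}%, within target: {within_target(pi)}")
```

Judging by distance from 100% treats 125% and 80% the same way: both are outside the band, even though one looks like over-delivery.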

- Story Points / Per Day (SPD)

- Story points per day is just that: how many story points the team completed per development day of the sprint (Completed / Team Days).

- Story Points / Per Day / Per Person (SP/PD/PP)

- This is perhaps the most useful metric to capture throughout the process. Most of our teams do not have the luxury of a consistent size or make-up; over the course of a few months, the team make-up will inevitably change. Once the team changes, velocity has to be reset.

- In addition, we may change our sprint duration over a long period of time (don’t change it every sprint). Changing sprint length undermines the comparability of our metrics, so velocity has to be reset.

- However, if we capture the average SP/PD/PP across all the teams in a product, we can begin to play “what-if” scenarios in long-term planning.

- Example:

- Completed / (Team Size * Sprint Days)

- 24 / (4 * 9) = 0.67

- You can then average that number over 4-6 sprints or even the year.

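Putting the SPD and SP/PD/PP formulas together, here is a short Python sketch that averages the per-person rate over several sprints and then uses it for a “what-if” forecast. The sprint history and the 6-person scenario are made-up numbers for illustration, not data from the episode, and the 9 development days assume the 2-week sprint described under Team Days.

```python
from statistics import mean


def story_points_per_day(completed: float, team_days: int) -> float:
    """SPD = Completed / Team Days."""
    return completed / team_days


def sp_per_day_per_person(completed: float, team_size: float, team_days: int) -> float:
    """SP/PD/PP = Completed / (Team Size * Team Days)."""
    return completed / (team_size * team_days)


# Hypothetical history: (completed points, team size, development days per sprint).
history = [(24, 4, 9), (30, 5, 9), (20, 4, 9), (27, 5, 9)]

rates = [sp_per_day_per_person(c, s, d) for c, s, d in history]
avg_rate = mean(rates)  # average across sprints, even though team size changed

# "What-if": expected points for a hypothetical 6-person team in a 9-day sprint.
forecast = avg_rate * 6 * 9
print(round(story_points_per_day(24, 9), 2), round(rates[0], 2))
print(round(avg_rate, 3), round(forecast, 1))
```

Because the rate is normalized by both people and days, it stays usable after the team size changes, which is exactly when raw velocity has to be reset.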

- Defects

- While we will likely never have a zero-defect product, it is useful to track how many defects our teams create over time. There are usually two types of defects: internal and external.

- Internal

- Our definition of done should, at minimum, require testing during the sprint, so that a story cannot be called DONE while it still has open defects. Internal defects, then, are the ones created while working on a backlog item in the sprint and fixed before the item is called DONE.

- External

- External defects are those that have “escaped” our development process and were not discovered during our testing. In a sense, our customer discovered the defect and the work item will become a new backlog item for a sprint.

- Warranty

- We should strive to build the warranty concept into our process. If you bought a car yesterday and the radio fell out, you could take it back and they would fix it fairly quickly. Our customers deserve the same service. Don’t manage a defect backlog; get used to fixing escaped defects immediately, while they are fresh in your mind (right after a sprint). It doesn’t take long to fix a defect; it takes a long time to find it once a customer has reported it.

- Defects per Story Point

- Tracking defects per story point helps us understand velocity a little better. If a team has drastically increased its velocity, have its defects increased along with it? Defects per story point show the relationship between velocity and the defects created.
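A minimal Python sketch of defects per story point, computed per sprint, with the option to count only escaped (external) defects. The `SprintRecord` structure and the before/after numbers are hypothetical, chosen to show a team whose velocity rose while its defect rate rose too.

```python
from dataclasses import dataclass


@dataclass
class SprintRecord:
    # Hypothetical per-sprint row from a tracking spreadsheet.
    completed_points: float
    internal_defects: int   # found and fixed before the item was called DONE
    external_defects: int   # escaped to customers after the sprint


def defects_per_point(sprint: SprintRecord, include_internal: bool = True) -> float:
    """Defects created per completed story point in one sprint."""
    if sprint.completed_points <= 0:
        return 0.0
    defects = sprint.external_defects
    if include_internal:
        defects += sprint.internal_defects
    return defects / sprint.completed_points


before = SprintRecord(completed_points=20, internal_defects=6, external_defects=1)
after = SprintRecord(completed_points=35, internal_defects=14, external_defects=7)
for label, s in [("before", before), ("after", after)]:
    total = defects_per_point(s)
    escaped = defects_per_point(s, include_internal=False)
    print(label, round(total, 2), round(escaped, 2))
```

Here velocity went from 20 to 35 points, but escaped defects per point quadrupled, which suggests the speed-up may have come at the cost of quality.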

Want to get involved?

This show is designed for you, and we’d love your input.

- Enjoyed what you heard today? It would be great if you left a rating and a review. It really helps, and we read every single one.

- Got an agile subject you’d like us to discuss or a question that needs an answer? Share your thoughts with us at podcast@mountaingoatsoftware.com

This episode’s presenters are:

Brian Milner is SVP of coaching and training at Mountain Goat Software. He’s passionate about making a difference in people’s day-to-day work, influenced by his own experience of transitioning to Scrum and seeing improvements in work/life balance, honesty, respect, and the quality of work.

Lance Dacy, known as Big Agile, is a dynamic, experienced management and technical professional with the proven ability to energize teams, plan with vision, and establish results in a fast-paced, customer-focused environment. He is a Certified Scrum Trainer® with the Scrum Alliance and has trained and coached many successful Scrum implementations from Fortune 20 companies to small start-ups since 2011. You can find out how to attend one of Lance’s classes with Mountain Goat Software here.