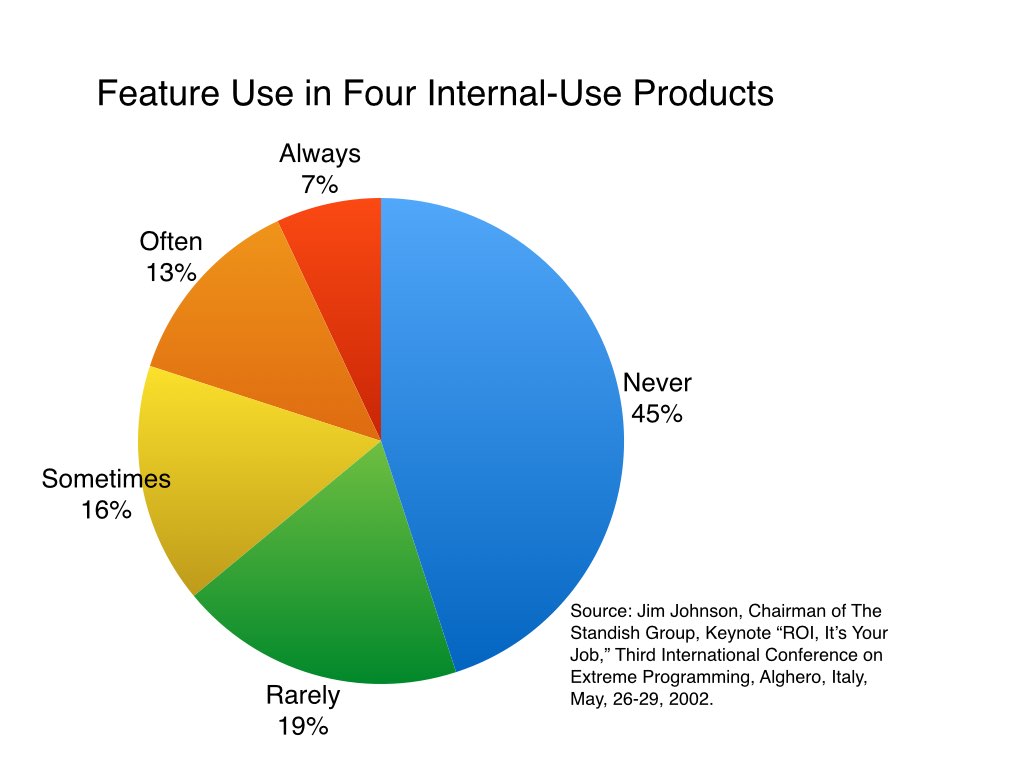

A frequently cited statistic is that 64 percent of features in products are “rarely or never used.” The source for this claim was Jim Johnson, chairman of the Standish Group, who presented it in a keynote at the XP 2002 conference in Sardinia. The data Johnson presented can be seen in the following chart.

Johnson’s data has been repeated so often that many of those citing it either don’t understand its origins or never bothered to check them.

The misuse or perhaps just overuse of this data has been bothering me for a while, so I decided to investigate it. I was pretty sure of the facts but didn’t want to rely solely on my memory, so I got in touch with the Standish Group, and they were very helpful in clarifying the data.

The results Jim Johnson presented at XP 2002 and that have been repeated so often were based on a study of four internal applications. Yes, four applications. And, yes, all internal-use applications. No commercial products.

So, if you’re citing this data and using it to imply that every product out there contains 64 percent “rarely or never used features,” please stop. Please be clear that the study was of four internally developed projects at four companies.

Last update: November 13th, 2016